Want More Money? Start Deepseek


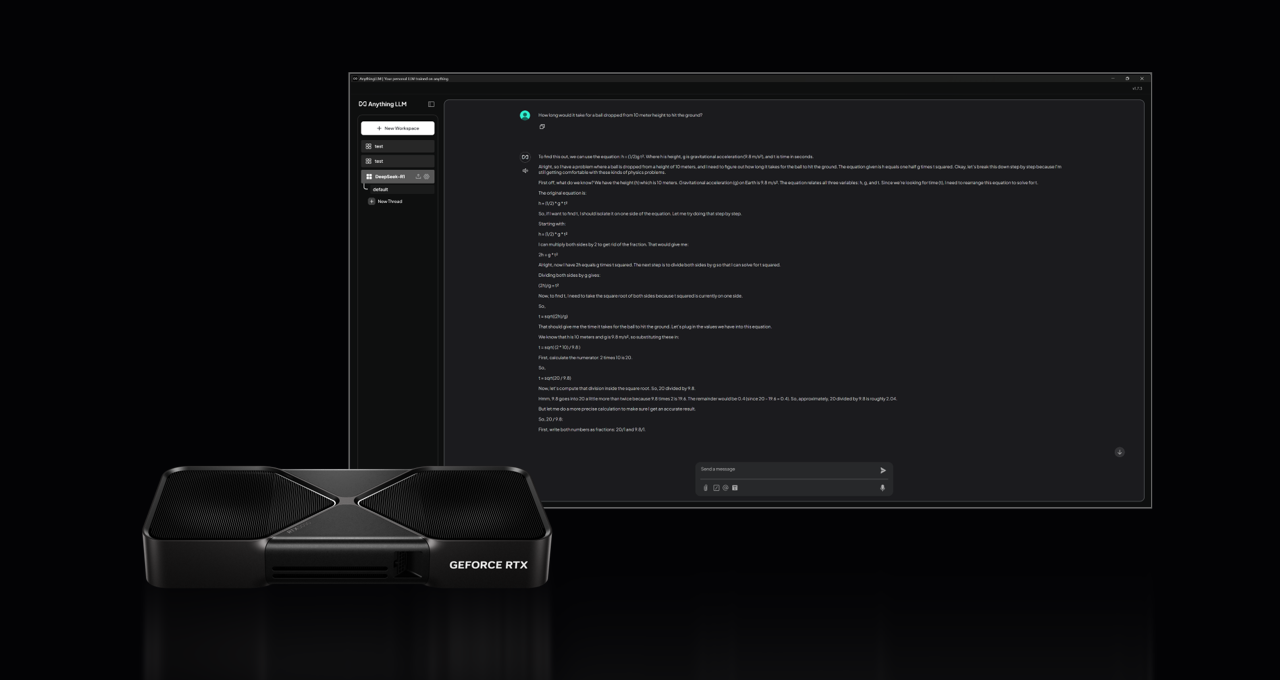

Through extensive testing and refinement, DeepSeek v2.5 demonstrates marked improvements in writing tasks, instruction following, and advanced problem-solving scenarios. While human oversight and guidance will remain crucial, the ability to generate code, automate workflows, and streamline processes promises to speed up product development and innovation. We further fine-tune the base model with 2B tokens of instruction data to get instruction-tuned models, namely DeepSeek-Coder-Instruct. For comparison, Meta AI's largest released model is Llama 3.1, with 405B parameters. Previously (391), I reported on Tencent's large-scale "Hunyuan" model, which gets scores approaching or exceeding many open-weight models (it is a large-scale MoE-type model with 389B parameters, competing with models like Llama 3's 405B). By comparison, the Qwen family of models performs very well and is designed to compete with smaller, more portable models like Gemma, Llama, et cetera. Then you may want to run the model locally. We live in a time when there is so much information available, but it's not always easy to find what we need. So thanks so much for watching.

Thanks for watching. Appreciate it. DeepSeek represents the latest challenge to OpenAI, which established itself as an industry leader with the debut of ChatGPT in 2022. OpenAI has helped push the generative AI industry forward with its GPT family of models, as well as its o1 class of reasoning models. This latest iteration maintains the conversational prowess of its predecessors while introducing enhanced code-processing abilities and improved alignment with human preferences. You can build the use case in a DataRobot Notebook using default code snippets available in DataRobot and HuggingFace, as well as by importing and modifying existing Jupyter notebooks. Whether you are a freelancer who needs to automate your workflow to speed things up, or a large organization tasked with communicating between your departments and thousands of clients, Latenode can help you find the best solution: for example, fully customizable scripts with AI models like DeepSeek Coder (https://bikeindex.org/users/deepseek1) and Falcon 7B, or integrations with social networks, project management services, or neural networks. You'll learn the most effective link-building strategy for your website, plus how to quickly outrank your competitors in link building and how to grow SEO traffic based on what's working for us. In this free link-building acceleration session, we'll show you how we take websites from zero to 145,000 a month and generate hundreds of thousands of dollars in sales on autopilot.

You'll get a free SEO domination plan to discover the secrets of SEO link building. For example, in the U.S., DeepSeek's app briefly surpassed ChatGPT to claim the top spot on the Apple App Store's free apps chart. DeepSeek and ChatGPT are AI-driven language models that can generate text, assist with programming, or carry out analysis, among other things. This naive cost can be brought down, e.g. by speculative sampling, but it gives a decent ballpark estimate. Well, I assume there's a correlation between the cost per engineer and the cost of AI training, and you can only wonder who will do the next round of brilliant engineering. It can understand natural language, whether you're typing a question in plain English, using industry-specific terms, or even uploading images or audio. The architecture is a Mixture of Experts with 256 experts, using eight per token. There is also a guide on how to run our 1.58-bit Dynamic Quants for DeepSeek-R1 using llama.cpp. Data scientists can leverage its advanced analytical features for deeper insights into large datasets. The paper presents the CodeUpdateArena benchmark to test how well large language models (LLMs) can update their knowledge about code APIs that are constantly evolving.
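To make "256 experts, using eight per token" concrete, here is a minimal sketch of top-k expert routing. It assumes a generic softmax gate renormalized over the selected experts, toy scalar "experts", and random router scores; these are illustrative stand-ins, not DeepSeek's actual code, and the real model's gating function may differ.

```python
import math
import random

def top_k_route(logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Generic softmax gate (an assumption): weights are normalized over the
    selected experts only, so they sum to 1.
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, gate_logits, experts, k=8):
    """Weighted sum of only the k routed experts; the others are never evaluated."""
    routes = top_k_route(gate_logits, k)
    return sum(w * experts[i](x) for i, w in routes), routes

NUM_EXPERTS = 256  # expert count stated in the text
TOP_K = 8          # experts activated per token, as stated in the text

# Hypothetical toy experts: expert i just scales its input by (i + 1).
experts = [lambda x, s=i: x * (s + 1) for i in range(NUM_EXPERTS)]

random.seed(0)
gate_logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router scores
y, routes = moe_forward(1.0, gate_logits, experts, k=TOP_K)
print(len(routes), f"active experts out of {NUM_EXPERTS}")  # 8 active experts out of 256
```

The point of the design is that per-token compute scales with the 8 selected experts rather than all 256, even though every expert's parameters still have to be stored.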

StarCoder (7B and 15B): the 7B model provided a minimal and incomplete Rust code snippet with only a placeholder. In 1.3B-model experiments, they observe that FIM 50% generally does better than MSP 50% on both infilling and code completion benchmarks. It figures out the bigger picture of what you're asking, making it better at handling tricky or unclear questions. Rich people can choose to spend more money on medical services in order to receive better care. DeepSeek is a smart search platform that helps people find information quickly and accurately. If you have any solid information on the subject, I would love to hear from you in private, do a bit of investigative journalism, and write up a real article or video on the matter. If you have a GPU with 24GB of memory (an RTX 4090, for example), you can offload several layers to the GPU for faster processing; with multiple GPUs, you can probably offload more. DeepSeek-VL (Vision-Language): a multimodal model capable of understanding and processing both text and visual data. Unlike common search engines that mostly match keywords, DeepSeek uses advanced technologies like artificial intelligence (AI), natural language processing (NLP), and machine learning (ML). Notably, DeepSeek-R1 leverages reinforcement learning and fine-tuning with minimal labeled data to significantly improve its reasoning capabilities.
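How many layers fit on a 24GB card depends on the size of the model. Here is a rough back-of-the-envelope sketch, assuming every layer is about the same size and setting aside a hypothetical 2GB reserve for the KV cache and runtime overhead. The model size and layer count below are illustrative inputs, not measured figures.

```python
import math

def layers_to_offload(model_size_gb, n_layers, vram_gb, reserve_gb=2.0):
    """Rough estimate of how many layers fit in GPU memory.

    Assumptions: all layers are equal in size, and `reserve_gb` covers the
    KV cache plus runtime overhead. Both are simplifications.
    """
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(0.0, vram_gb - reserve_gb)
    # Never offload more layers than the model actually has.
    return min(n_layers, math.floor(usable_gb / per_layer_gb))

# Hypothetical inputs: a ~131 GB quantized checkpoint with 61 layers.
print(layers_to_offload(131, 61, vram_gb=24))      # one 24 GB card -> 10
print(layers_to_offload(131, 61, vram_gb=2 * 24))  # two 24 GB cards -> 21
```

The resulting number is the kind of value you would pass to llama.cpp's `--n-gpu-layers` (`-ngl`) option. Treat it as a starting point and lower it if you run out of GPU memory. Summing VRAM across cards, as in the second call, ignores how layers are actually split between devices.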
